Narrative Warfare in the Digital Age

Democracy as voting vs democracy as public reasoning

Jürgen Habermas famously traced the formation of a bourgeois democratic public sphere to the spread of coffee shops in European capitals, where white men assembled to read their newspapers and discuss the daily news over cups of coffee. The German philosopher made a fundamental contribution to democratic theory by insisting that the democratic health of modern polities depends not only on their constitutional provisions but also on the informational infrastructure of public reasoning. Even if people can vote, how can they freely decide between political options if the information they access is biased and there is no space for the open exchange of opinions? Habermas stressed that democracy needs spaces where individuals can comment on, and reason in public about, the news they have consumed, relying on a professional press that abides by high standards of quality. In Europe today, this key distinction between democracy conceived as the act of electing a government through free elections and democracy conceived as voting informed by a prior public exercise of reasoning remains relevant. For instance, the debates between those who denounce the attacks on press freedom by Viktor Orbán’s government and those who defend that government as democratically elected, and thus as representing the “true” will of the people in a democratic Hungary, echo this distinction between two understandings of democratic government.

Despite their merits, Habermas’ essays on democratic theory, which associate the birth of the informational infrastructure of modern democracies with the spread of exclusively male coffee clubs across Europe, nevertheless deserve critical scrutiny. In particular, Habermas has been criticised for obscuring the darker realities of exclusion on which this nineteenth-century informational order rested: first, the exclusion of women, and the spread of a sexist discourse justifying the exclusion of “emotions” from politics (and thus, according to patriarchal norms, of “women”); second, the exclusion of colonial subjects, whose contribution to the rise of coffee-shop culture was limited to the extraction of the requisite natural resources (coffee), leaving white men free to discuss the future of humanity within their small clubs of consumers of imperial goods. Since Habermas wrote his essays, feminist and postcolonial scholarship has deconstructed the notion that a “pure” form of democratic public reasoning existed in Europe from the mid-nineteenth to the early twentieth century, before the First World War tore that informational order to pieces by militarising all instruments of information production. Just as the agora of ancient Athenian democracy rested on the exclusion of women and slaves, modern European democracies were in practice based on the exclusion of suffragettes and colonial subjects.

From the nineteenth- to the twentieth-century imperial informational orders

The informational order of European “democracies” changed markedly during the twentieth century, first in the interwar era and during the Second World War (at least until the Nazis marched on Stalingrad), and then in the post-war era (when the conflicts between blocs were intermingled with wars of decolonisation in what had been French Indochina and Algeria, Portuguese Angola, Americanised Cuba and much of the former British imperial territories, to cite only a few Cold War “hot spots”). On all sides, architects of modern propaganda used new technologies and media such as cinema (from Eisenstein’s Battleship Potemkin to Leni Riefenstahl’s Triumph of the Will), photojournalism (think of Paris Match’s motto “le poids des mots, le choc des photos”, “the weight of words, the shock of photos”) or radio broadcasting (Radio Free Europe, for instance). When fighting wars of (de)colonisation in the 1950s, imperial powers financed massive “psychological action” campaigns directed at their colonial subjects, who were told that new forms of imperial reconfiguration would secure their political rights in a postcolonial democracy strongly tied to the metropolis – the goal being to prevent the new nation-states from acceding to full independence and to convince hesitant metropolitan publics that support for independence was not as obvious as the freedom fighters claimed. On the other hand, images of bodies burnt by napalm were disseminated to shock world public opinion and to call the United Nations to action: they were intended to elicit an emotional response and transnational mobilisation, calling for the immediate cessation of hostilities and/or of the atrocities associated with colonial warfare. In the age of humanitarian action, emotions could no longer be relegated to a space “outside” public reasoning; they had become an essential and legitimate component of informational warfare, necessary to mobilise public opinion and transform compliant masses into active democratic participants.

At the same time, some German philosophers, particularly from the Frankfurt School (such as Theodor Adorno and Max Horkheimer), highlighted the dangers of political and economic elites instrumentalising the media, entertainment and cultural industries to keep the masses in check. But without new informational techniques such as radio broadcasts, or war photographers travelling to conflict zones and bringing their pictures to the world stage, emotions and humanitarian action would not have destabilised the cold and opaque tactics of imperial bureaucratic politics. Images and films exposed power, and abuses of power, then as now. Think of the pictures of torture sessions at Abu Ghraib prison, which shocked the world and marked a turning point in how world public opinion viewed the US occupation of post-Hussein Iraq. Images and films have shaped public action over the past 50 years of humanitarian interventions, as images of massacres drew the attention of Anglo-American professional broadcasters and took a central place on 24-hour news channels. While cultural biases may not have disappeared entirely since the nineteenth-century informational age, emotions have become a legitimate object of discourse in public reasoning about foreign interventions.

The new twenty-first-century informational order underlying humanitarian interventions

The new informational order associated with the digitalisation of our information technologies represents another epochal change in the global information infrastructure of democratic government. The digitalisation of news production has called into question the Habermasian ideal of a dialogic public space, as it has potentially fragmented “the” public sphere into myriad self-centred and strongly bounded communication bubbles assembled by preference-matching algorithms. The media landscape, now made up of billions of blogs and social media pages, has reached a degree of fragmentation never witnessed before. This is all the more worrying as it goes hand in hand with the decline of professional journalism and a new emphasis on the number of “clicks”, “views” and “likes” that each posted article attracts, rather than on the number of sources collected, witnesses interviewed and experts consulted.

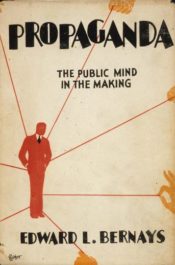

Rather than producing structured content, social media platforms churn out a daily avalanche of unverified (and possibly fake) news, (mis)information, conspiracy theories and toxic headlines. Today, super-spreaders of “fake news” often do not know, or even suspect, that the content they re-post might not be “true” and that they are participating in the transnational construction of “lies”. The distinction between “truthful” discourses and “lies” no longer holds. Traditional Cold War propaganda, by contrast, based on outright and purposeful lying intended to produce maximum political effect, was at least produced by propagandists who knew the difference between truth and lies, and who frequently chose to spread the latter over the former. The ability to lie, after all, has since the Renaissance characterised the job of a diplomat, the “man of virtue sent abroad to lie for his country”. To describe the epistemic status of many news items posted on social media today, one may adopt the concept of “bullshit”, as theorised by philosopher Harry Frankfurt and further elaborated by Fuad Zarbiyev in his contribution to this Dossier.

This is the age of alt-truth, or post-truth, when one can no longer differentiate lying from speaking the truth, and when it has become increasingly difficult to identify a benchmark to separate the two. At the same time, it is also the age when millions of Twitter users have collectively decided to spend hours commenting on, and reasoning about, the news, elevating a new generation of social media consumers into a transnational, active proto-citizenry on a scale rarely seen in human history – albeit one subject to a 280-character limit. The paradox is that this new informational infrastructure hesitates between these two developments: while millions spend more time trying to discern truth from lies, millions of others no longer consider it possible to distinguish between the two at all. In a post-truth order characterised by the outright invalidity of any (or most) truth claims, logos – in the form of reasoned words, arguments and discourses – has in many ways been superseded by a nihilist maelstrom of temporary affects, hyperbolic (identity) tropes and ephemeral visual expressions (memes). As historical consciousness is crowded out by an ever-inflating present and différance becomes all-pervasive, information is no longer designed to convey meaning or hermeneutical cues, but rather to offer emotional comfort and apodictic certainties to “followers” or “believers”.

The unholy alliance of epistemic nihilism

While states are not the only actors running such disinformation campaigns – a multitude of other actors, including political parties, terrorist groups, hackers, corporate entities, activists and civil society organisations, undertake similar operations – they have nevertheless come to play an increasingly central role in the spread of disinformation. Authoritarian leaders and illiberal regimes worldwide are capitalising on the ambient epistemic nihilism; they share an intrinsic interest in deconstructing the prevailing humanist paradigm in order to avoid scrutiny (for instance on human rights) and to project their power more freely on the world stage. They sow epistemic chaos abroad through the spread of disinformation, exploiting social media’s disregard for factual authenticity, its appetite for sensationalism and its ingrained tendency towards polarisation. Among states, Russia has been by far the most active, and in many ways the archetype, in the breadth and range of instruments it has deployed to spread disinformation abroad. Its interference in the 2016 US presidential election is generally considered ground zero of modern disinformation campaigns; other Russian campaigns have targeted Europe with the aim of sowing social discord and amplifying political tensions, notably by supporting the Brexit campaign and Catalan separatism.[1] Russian businessman Yevgeny Prigozhin, now heading the infamous Wagner paramilitary organisation, was previously actively involved in disinformation campaigns targeting Libya in 2019 and again in 2020.[2] Appealing to emotions such as anger, resentment and fear, these campaigns seek to undermine the social contract at the core of liberal-democratic societies by stirring up cynicism, paranoia and distrust.[3]

Most government-linked disinformation efforts remain focused on domestic audiences and are designed to suppress human rights, discredit political opponents and stifle dissent. When running disinformation campaigns abroad, states frequently hire former secret service agents or PR firms to pollute message boards and chats, endlessly repeat stereotyped messages (astroturfing), or simply contradict, insult or discredit alternative viewpoints on public fora. Greatly aided in this undertaking by the postmodern assumption that all opinions or claims are equally valid, they seek to render democratic information ecosystems unintelligible by flooding them with noise, fake news, conspiracy theories and other types of nonsense. More sophisticated AI, allowing users to produce deep fakes such as artificially generated profile pictures or manipulated videos, or, like ChatGPT, to generate endless similar but slightly differentiated “opinions”, is expected to further amplify the noxious effect of disinformation campaigns in the future.

Russia has not been alone in spreading disinformation internationally. Many other countries, including in the Global South, have launched their own disinformation campaigns, most notably Iran, Saudi Arabia, the Philippines, North Korea and China[4] but also Thailand, Venezuela, Cuba and Israel. Since 2017, for instance, Saudi Arabia has created over 10,000 Twitter accounts to spread fake news and slander about Qatar, in particular concerning the latter’s alleged ties to the Muslim Brotherhood, with many accounts’ usernames assuming the identity of Qatari dissidents or of members of the royal family or the interim government. Other countries have until now been less active in launching disinformation campaigns abroad. China, for instance, has mostly relied on more traditional propaganda or soft power – not unlike former European imperial powers such as France or the United Kingdom – notably by sponsoring individual projects and Chinese heritage associations abroad, and by setting up alternative multilateral organisations to promote a Sino-centred worldview and approach to multilateralism. While its huge army of informers has so far been active mostly on the domestic front, there are, however, signs that since the Hong Kong protests and the Covid pandemic, China may be increasingly tempted to copy Russian tactics to spread disinformation abroad.

Making social platforms accountable for their participation in the new informational order

Thanks to their commitment to freedom of expression and to the access to information provided by professional media organisations, liberal democracies may still enjoy a comparative advantage today against disinformation campaigns seeking to undermine public dialogue and trust, two key pillars of contemporary democracies. The role of investigative journalists conducting “slow research” may never have been as important as it is today, when news loses relevance so rapidly, if it is “news” at all. Their role is more necessary than ever to curb the power of social media platforms engaged in a frenetic pursuit to amass and market gigantic sets of private data, a pursuit that has given rise to a new type of “surveillance capitalism”[5] serving both neoliberal interests bent on pre-empting the formulation of progressive political projects and illiberal governments using digital technologies to monitor their citizens with unprecedented reach and granularity. To convince us that “Expensive is Free”, to paraphrase George Orwell’s dystopian novel 1984, in which the Party proclaims that “War is Peace” and “Freedom is Slavery”, digital capitalists spend lavishly on lobbying firms to convince the US government and its allies that there is no alternative to letting them access our data and share it with US national security agencies and their allies. But today, as we saw when Mark Zuckerberg was questioned by the US Congress after a whistle-blower revealed Facebook’s role in the Cambridge Analytica scandal, democracies have the power – when they have the will – to tame the influence of new information technologies. The latter do not necessarily have to become “weapons of mass destruction” if the billionaire owners of social media companies are held accountable for their business models.

If held in check, social media companies might be prevented from analysing trillions of messages in order to sell their content to the highest bidder, be it a spy agency in the service of an authoritarian government or a company seeking to maximise the revenue generated by its advertising investments. If held to account for their actions, social media companies might stop working for regimes around the world that use disinformation campaigns, trolls and bots to influence and control their citizens, discredit or blackmail their opponents, or project power abroad, notably by interfering in foreign elections.

Information warfare and disinformation campaigns have become global issues, feeding on and nurturing a new post-truth reality based on alternative facts and, ultimately, eroding the liberal consensus on which international cooperation is based. Nowhere are the stakes of global (dis)information campaigns better illustrated than in the media struggles over the presentation and interpretation of the unfolding war in Ukraine. The present Dossier thus seeks a better understanding of the nature of this new era of global disinformation, and of how it differs from earlier eras marked by the dissemination of more traditional propaganda (notably the Cold War) or by the spread of American (or liberal) soft power through mass media and consumption. It also raises the question of what can be done to halt or reverse the noxious effects of disinformation campaigns and to restore trust in the global information order. As observed by Irene Khan, the UN Special Rapporteur on the Promotion and Protection of the Right to Freedom of Opinion and Expression, “the information environment has become a dangerous, expanding theatre of conflict in the digital age. State and non-state actors, enabled by new technologies and social media platforms, have weaponised information to sow confusion, feed hate, incite violence, instigate public distrust and poison the information environment.”[6] This is not a definitive conclusion, but a political battle worth fighting.

[1] Corneliu Bjola, “The ‘Dark Side’ of Digital Diplomacy: Countering Disinformation and Propaganda”, ARI 5/2019, Real Instituto Elcano, 15 January 2019, https://www.realinstitutoelcano.org/en/analyses/the-dark-side-of-digital-diplomacy-countering-disinformation-and-propaganda/.

[2] Josh A. Goldstein and Shelby Grossman, “How Disinformation Evolved in 2020”, TechStream (Brookings), 4 January 2021, https://www.brookings.edu/techstream/how-disinformation-evolved-in-2020/.

[3] Christina la Cour, “Theorising Digital Disinformation in International Relations”, International Politics 57 (2020), https://doi.org/10.1057/s41311-020-00215-x.

[4] Corneliu Bjola, “The ‘Dark Side’ of Digital Diplomacy: Countering Disinformation and Propaganda”, ARI 5/2019, Real Instituto Elcano, 15 January 2019, https://www.realinstitutoelcano.org/en/analyses/the-dark-side-of-digital-diplomacy-countering-disinformation-and-propaganda/.

[5] Shoshana Zuboff, “Big Other: Surveillance Capitalism and the Prospects of an Information Civilization”, Journal of Information Technology 30, no. 1 (2015), https://doi.org/10.1057/jit.2015.5.

[6] Report of the Special Rapporteur on the Promotion and Protection of the Right to Freedom of Opinion and Expression, A/77/288, 12 August 2022, https://www.ohchr.org/en/documents/thematic-reports/a77288-disinformation-and-freedom-opinion-and-expression-during-armed, p. 25.

1

Propaganda and Disinformation between East and West: A Long-Term Perspective

During the Cold War, the superpowers both had an interest in reducing political complexity to a simple, binary framework, highlighting certain facts in order to promote their respective narratives. In the post-Cold War era, argues Jussi Hanhimäki, facts themselves have become relative, attention spans have diminished and it is the soundbite that has taken on fundamental importance in political discourse.

2

The Politics of International Legal Justifications: On Truth, Lies and Bullshit

In today’s so-called “post-truth” era, politicians and media commentators frequently make statements that take patently obvious liberties with the truth. Examining the growing prevalence of political “bullshit”, in the sense given to the term by philosopher Harry Frankfurt, Fuad Zarbiyev explores the implications of this lack of concern for the truth for international law and for international affairs in general.

3

Interpreting Disinformation

Throughout history, political authority has frequently been underpinned by a certain element of mysticism: English and French monarchs, for example, claimed divine healing powers as late as the 17th century. Drawing on the work of historian Marc Bloch, Carolyn Biltoft examines the emergence of the so-called “royal touch”, taking this practice as a starting point to explore the transhistorical nature of disinformation in general.

4

Vulgar Vibes: The Atmospheres of the Global Disinformation Order

In a world where populist actors have become increasingly vocal – and increasingly vulgar – atmosphere and mood have come to play a key role in political discourse. Analysing this phenomenon with a focus on the Brazilian context, Anna Leander argues that the emerging discourse of vulgarity should itself be understood as a form of disinformation and studied accordingly.

5

The Propaganda War over Ukraine: Unanimity, on Both Sides?

Western media coverage of the war in Ukraine has been characterised by a virtually unanimous condemnation of Russia’s invasion of its neighbour. In Russia, too, argues Andre Liebich, Vladimir Putin has enjoyed near-unanimous support from the media for his “special military operation”, albeit thanks to the extensive use of intimidation and censorship.

6

“Dezinformatsiya” and Foreign Information Manipulation and Interference

Dating back to the Stalinist era and beyond, the history of Russian state-sponsored disinformation is long and varied. Jérôme Duberry reveals the complexities of the “disinformation ecosystem” in today’s Russia, from online disinformation campaigns employing bots, trolls and “cyborgs” to the use of state-funded media to disseminate state-approved content.

7

The Politics of Information Manipulation in the 21st Century: A Case Study of the Islamic Republic of Iran

In the 44 years since the Iranian Revolution, the Islamic Republic has taken increasingly extreme measures to control the circulation of information at home and abroad. Clément Therme probes the extent and limitations of Iran’s strategy of information suppression, focusing in particular on the effects of these restrictions on researchers and journalists.

8

The Islamic State’s Virtual Caliphate

Social media played a crucial role in the establishment of an Islamic Caliphate in Iraq and Syria, and the Islamic State proved adept at using the Internet as a tool to promote its vision of a utopian nation. Matthew Bamber-Zryd analyses the Islamic State’s information strategy in the context of its successes and failures on the battlefield, as it moved from insurgency to nation-building and back again.

Healing the Global Information System

A first line of defence is legal: governments have started to devise new laws and regulations to limit the financing of political ads or to make tech companies liable for hate speech (Germany’s Network Enforcement Act, NetzDG, for instance). Legal responses, however, need to be carefully calibrated, as they may otherwise trigger adverse effects such as undue censorship.

Other potential fixes are more technical, for instance promoting fact-checking and credibility platforms such as The Trust Project and the Journalism Trust Initiative, which tag news outlets with credibility indicators and denounce fake news. Social media platforms have also tried to step up their monitoring. However, these efforts are highly labour-intensive, and in this respect the vast layoffs at these platforms in the aftermath of the pandemic have proven extremely problematic. While hate speech is relatively easy to identify, it is frequently exceedingly difficult to distinguish disinformation from opinions that are authentic but unwittingly erroneous. Moreover, false information tends to outperform true information on social media platforms (see Corneliu Bjola’s article).

Another potential solution would be to overhaul the sales-oriented business model of social media platforms by making access payable, which would de facto prevent fake outlets, trolls and bots from operating from free accounts.

It is readily apparent, however, that technical fixes alone will not suffice. Media and information sharing is as much about making sense of the world as it is about the simple transmission of messages. In many ways, it is a ritualistic process that plays an important role in the formation of identities and digital communities, allowing people to relate to diverse metanarratives. It has thus been argued that what is needed is not more repressive tools to limit the spread of false information, but rather (a) to “make journalism great again” by supporting and enhancing the role of free, independent and diverse media, and (b) to promote digital information literacy among the broader population in order to build resilience through critical thinking and self-knowledge. Some techno-optimists go one step further, claiming that the new epistemic configuration constitutes a unique opportunity to create new, more emancipated spaces of solidarity from below, based not on rational assumptions but on shared beliefs, hopes and emotions. From this perspective, traditional information systems were flawed precisely because of their focus on logos and their perpetuation of class, racial and gender injustices, and their demise could constitute an opportunity to create a more inclusive and just global information system. Fostering new, positive emotional frameworks, as Lula did with his appeal to love during his most recent presidential campaign, amounts to combatting disinformation with its own tools.

PODCAST: The Right of Freedom in Armed Conflicts: A View from the United Nations, with Paige Morrow

Research Office, Geneva Graduate Institute.

PODCAST: The link between political message and social context, with Michelle Weitzel

Research Office, Geneva Graduate Institute.

DEFINITIONS | Some terms in the world of disinformation

Astroturfing

An unfair practice of propaganda and manipulation, used in the media and particularly on the internet, that consists of giving the impression of a spontaneously emerging mass phenomenon when in reality it has been created from scratch to influence public opinion. (Source: Wiktionnaire, s.v. “astroturfing”.)

Computational propaganda

Computational propaganda involves the “use of algorithms, automation, and human curation to purposefully distribute misleading information over social media networks” (Woolley & Howard 2018). While propaganda has existed throughout human history, the rise of digital technologies and social media platforms has brought new dimensions to this practice. (Source: Programme on Democracy & Technology of the Oxford Internet Institute, “What Is Computational Propaganda?”.)

Conspiracy theory

A conspiracy theory is an explanation for an event or situation that asserts the existence of a conspiracy by powerful and sinister groups, often political in motivation, when other explanations are more probable. The term generally has a negative connotation, implying that the appeal of a conspiracy theory is based in prejudice, emotional conviction, or insufficient evidence. A conspiracy theory is distinct from a conspiracy; it refers to a hypothesised conspiracy with specific characteristics, including, but not limited to, opposition to the mainstream consensus among those who are qualified to evaluate its accuracy, such as scientists or historians. (Source: Wikipedia, “Conspiracy Theory”.)

Black propaganda

Black propaganda is intended to create the impression that it comes from those it is supposed to discredit. Black propaganda contrasts with grey propaganda, which does not identify its source, as well as white propaganda, which does not disguise its origins at all. It is typically used to vilify or embarrass the enemy through misrepresentation. The major characteristic of black propaganda is that the audience are not aware that someone is influencing them, and do not feel that they are being pushed in a certain direction. This type of propaganda is associated with covert psychological operations. Black propaganda is the “big lie”, including all types of creative deceit. Black propaganda relies on the willingness of the receiver to accept the credibility of the source. (Source: Wikipedia, “Black Propaganda”.)

Disinformation

Disinformation is false information deliberately spread to deceive people. It is sometimes confused with misinformation, which is false information but not deliberately so. Disinformation often takes the form of fake news. Disinformation comes from the application of the Latin prefix dis- to information, to create the meaning “reversal or removal of information”. Disinformation attacks involve the intentional dissemination of false information, with an end goal of misleading, confusing, or manipulating an audience. They may be executed by political, economic or individual actors to influence state or non-state entities and domestic or foreign populations. These attacks are commonly employed to reshape attitudes and beliefs, drive a particular agenda, or elicit certain actions from a target audience. Tactics include the presentation of incorrect or misleading information, the creation of uncertainty, and the undermining of both correct information and the credibility of information sources. (Sources: Wikipedia, “Disinformation” and “Disinformation Attack”.)

Fake news

Fake news is false or misleading information presented as news. Fake news often has the aim of damaging the reputation of a person or entity, or making money through advertising revenue. Although false news has been spread throughout history, the term fake news was first used in the 1890s, when sensational reports in newspapers were common. Nevertheless, the term does not have a fixed definition and has been applied broadly to any type of false information. It has also been used by high-profile people to apply to any news unfavourable to them. In some definitions, fake news includes satirical articles misinterpreted as genuine, and articles that employ sensationalist or clickbait headlines that are not supported in the text. Because of this diversity of types of false news, researchers are beginning to favour information disorder as a more neutral and informative term. (Source: Wikipedia, “Fake News”.)

Information Manipulation Theory (IMT)

IMT is a theory of deceptive discourse production, arguing that, rather than communicators producing “truths” and “lies”, the vast majority of everyday deceptive discourse involves complicated combinations of elements that fall somewhere in between these polar opposites, with the most common form of deception being the editing-out of contextually problematic information (i.e., messages commonly known as “white lies”). More specifically, individuals have available to them four different ways of misleading others: playing with the amount of relevant information that is shared, including false information, presenting irrelevant information, and/or presenting information in an overly vague fashion. (Source: Wikipedia, “Information Manipulation Theory”.)

Misinformation

Misinformation is incorrect or misleading information. It differs from disinformation, which is deliberately deceptive. Misinformation comes from the application of the Latin prefix mis- to information, to create the meaning “wrong or false information”. Rumours, by contrast, are information not attributed to any particular source, and so are unreliable and often unverified, but can turn out to be either true or false. Even if later retracted, misinformation can continue to influence actions and memory. People may be more prone to believe misinformation if they are emotionally connected to what they are listening to or reading. (Source: Wikipedia, “Misinformation”.)

Mute news

Mute news is a pernicious form of information in which a key issue that often underlies the concerns of the public and the body politic is obscured from media attention. (Source: Lê Nguyên Hoang and Sacha Altay, “Disinformation: Emergency or False Problem?”, Polytechnique Insights, 6 September 2022.)

Propaganda

Propaganda is communication that is primarily used to influence or persuade an audience to further an agenda, which may not be objective and may be selectively presenting facts to encourage a particular synthesis or perception, or using loaded language to produce an emotional rather than a rational response to the information that is being presented. In the 20th century, propaganda was often associated with a manipulative approach, but historically, propaganda has been a neutral descriptive term of any material that promotes certain opinions or ideologies. (Source: Wikipedia, “Propaganda”.)

Typosquatting

Typosquatting, also called URL hijacking, a sting site or a fake URL, is a form of cybersquatting, and possibly brandjacking, which relies on mistakes such as typos made by Internet users when inputting a website address into a web browser. Should a user accidentally enter an incorrect website address, they may be led to any URL (including an alternative website owned by a cybersquatter). (Source: Wikipedia, “Typosquatting”.)

PODCAST: The Difference between Russian and Ukrainian propaganda, with Svitlana Ovcharenko

Research Office, Geneva Graduate Institute.

PODCAST: (Dis)Information as a tool of warfare, with Jean-Marc Rickli

Research Office, Geneva Graduate Institute.

PODCAST: Mobilization from below to face the control in authoritarian states, with G. O. and V. Neeraj

Research Office, Geneva Graduate Institute.